⚠️ Beware of models: not everything they say is true

Models are improving fast, but they are not infallible.

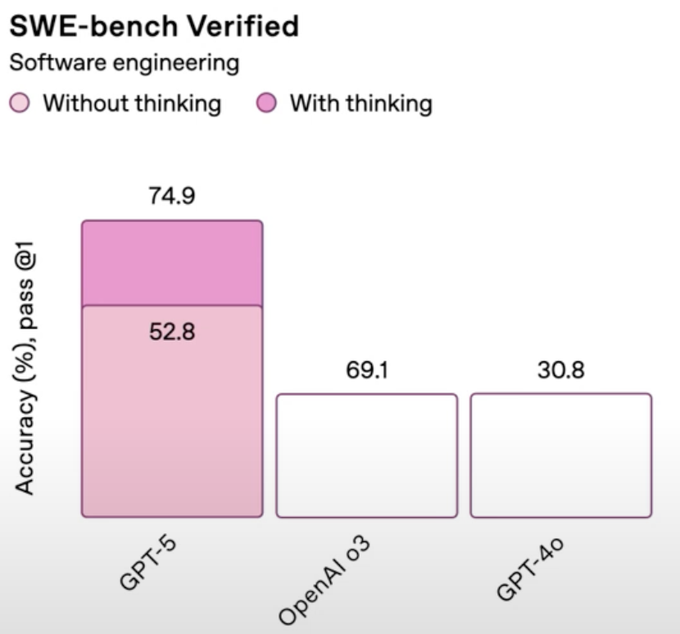

A recent example: during the GPT‑5 presentation, one bar chart showed bars whose heights did not match the values they represented.

👉 A perfect reminder that you should validate, not just trust.
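A mismatch like that chart's can be checked mechanically. Below is a minimal Python sketch. It assumes you already have each bar's labelled value and its drawn height (for example, in pixels), and that the axis is linear and starts at zero, so height should be proportional to value. The function name `find_disproportionate_bars` and the 5% tolerance are illustrative choices, not an existing tool.

```python
import statistics
from typing import Sequence


def find_disproportionate_bars(
    values: Sequence[float],
    heights: Sequence[float],
    rel_tol: float = 0.05,
) -> list[int]:
    """Return the indices of bars whose height does not match their value.

    Hypothetical helper. Assumes a linear axis starting at zero, so every
    bar should share the same height/value ratio.
    """
    if len(values) != len(heights):
        raise ValueError("values and heights must have the same length")
    if any(v <= 0 for v in values):
        raise ValueError("this sketch assumes strictly positive values")

    # Height per unit of value for each bar.
    ratios = [h / v for v, h in zip(values, heights)]
    # The median ratio is the reference scale; it tolerates a few bad bars.
    reference = statistics.median(ratios)
    # Flag bars whose scale deviates from the reference by more than rel_tol.
    return [i for i, r in enumerate(ratios) if abs(r - reference) > rel_tol * reference]


if __name__ == "__main__":
    # Hypothetical numbers: the third bar is drawn twice as tall as it should be.
    print(find_disproportionate_bars([50.0, 70.0, 30.0], [100.0, 140.0, 120.0]))
```

With these made-up numbers the first two bars use 2 pixels per unit and the third uses 4, so only index `2` is flagged. The median is used instead of the first bar as the reference, so a single wrong bar cannot distort the scale used for the others.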

🧩 Quick explanation

When a model generates text, code, or graphics, it doesn’t “understand” things the way a person would.

It can produce results that are convincing but incorrect, because its goal is to generate output that seems coherent, not output that is necessarily correct.

That’s why it’s essential to review, cross-check, and apply your own judgement.

Also published on LinkedIn.