🧠 Is your AI losing the thread? The problem of “Context Rot”

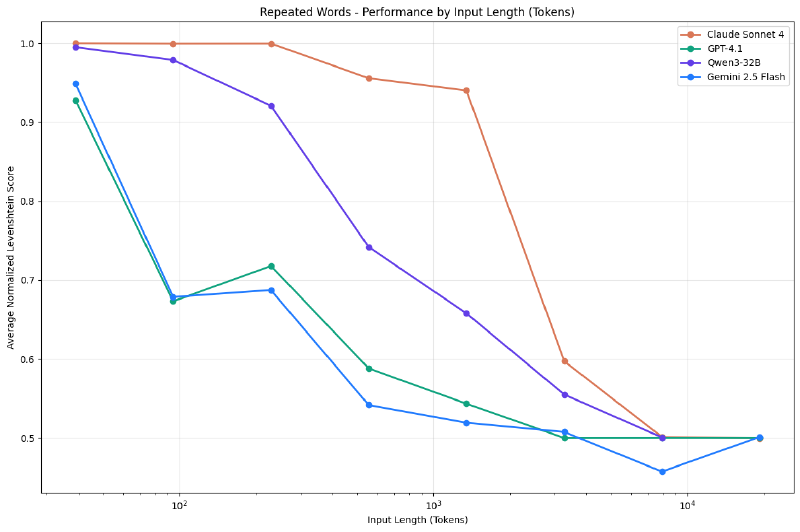

As LLMs’ context windows grow, a new technical challenge emerges: Context Rot (Context Degradation). 📉

Chroma has studied how information in the prompt effectively “rots”, losing its usefulness, when we flood the model with too much irrelevant data. The result is worse accuracy and weaker reasoning.

Key takeaways from the research:

- 🚀 More is not always better: Filling a giant context window can introduce noise.

- ⚖️ Density vs. Size: The key is not how much information fits, but how valuable it is.

- 🔍 Smart Retrieval: We need systems that select the essentials before handing them to the AI.
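To make the “filter before delivering” idea concrete, here is a minimal sketch of relevance filtering under a context budget. It is not Chroma's method or API. It assumes a toy bag-of-words cosine similarity in place of a real embedding model and uses word count as a rough stand-in for tokens. The names `select_context`, `max_tokens`, and `min_score` are hypothetical, chosen for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens: a crude stand-in for a real tokenizer."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors (toy relevance score)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def select_context(query: str, chunks: list[str], max_tokens: int,
                   min_score: float = 0.1) -> list[str]:
    """Hypothetical filter: keep the most relevant chunks that fit the budget.

    Chunks scoring below min_score are treated as noise and dropped,
    even when there is still room left in the budget.
    """
    q = Counter(tokenize(query))
    scored = sorted(
        ((cosine(q, Counter(tokenize(c))), c) for c in chunks),
        key=lambda pair: pair[0],
        reverse=True,  # most relevant first
    )
    selected, used = [], 0
    for score, chunk in scored:
        if score < min_score:
            break  # list is sorted, so everything after this is less relevant
        cost = len(tokenize(chunk))  # word count as a rough token estimate
        if used + cost > max_tokens:
            continue  # too big for what is left; a smaller chunk may still fit
        selected.append(chunk)
        used += cost
    return selected


if __name__ == "__main__":
    pages = [
        "Photosynthesis converts light energy into chemical energy in plants.",
        "Napoleon was crowned emperor of France.",
        "Chlorophyll absorbs light, driving photosynthesis in plant cells.",
    ]
    query = "How do plants use light in photosynthesis?"
    for chunk in select_context(query, pages, max_tokens=30):
        print(chunk)
```

Run as a script, this keeps the two photosynthesis sentences and drops the unrelated one, so the model would receive a smaller but denser context. A real system would swap the toy scorer for embeddings and a vector search, but the shape of the idea (rank by relevance, cut off the noise, respect a budget) stays the same.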

🐣 In a nutshell

Imagine you’re studying for an exam and you have a 1,000-page book. If you try to read and remember all 1,000 pages at once right before the test, you’re likely to forget important details or get confused.

Context Rot is what happens to AI when you give it “too much book” and little guidance: it starts making mistakes because it can’t tell what’s vital from what’s filler. The solution is not to give it a bigger book, but to help it find the exact page it needs.

More information at the link 👇