🚀 Deep Learning from scratch… and without TensorFlow or PyTorch!

This approach builds deep neural networks using only NumPy, with an architecture designed to be more explainable, faster, more robust, and easier to tune.

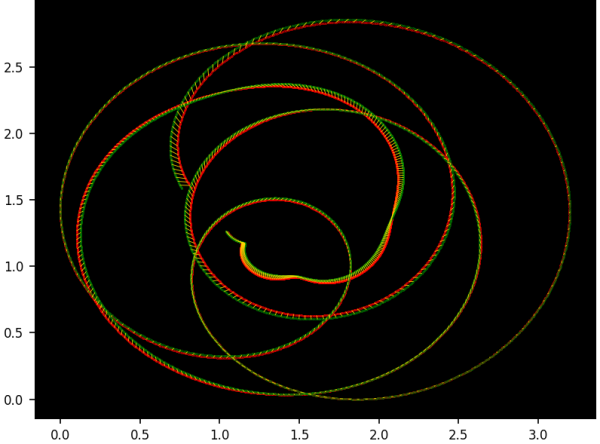

The most innovative aspects are techniques such as equalization, chaotic gradient descent, and ghost parameters, which aim to speed up and stabilize training.
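To make the NumPy-only idea concrete, here is a minimal sketch of a tiny network trained with hand-written backpropagation. It is an illustration under assumptions, not the method described in this post: the toy data (`y = sin(3x)`), the layer size, the learning rate, and the use of plain gradient descent (rather than chaotic gradient descent) are all choices made for this example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy regression data (illustrative choice, not from the post).
X = rng.uniform(0.0, 1.0, size=(200, 1))
y = np.sin(3.0 * X)

# One hidden tanh layer; the size is arbitrary.
hidden = 16
W1 = rng.normal(0.0, 1.0, size=(1, hidden))
b1 = np.zeros(hidden)
W2 = rng.normal(0.0, 1.0, size=(hidden, 1)) / np.sqrt(hidden)
b2 = np.zeros(1)

def forward(inputs):
    """Return hidden activations and network output."""
    h = np.tanh(inputs @ W1 + b1)
    return h, h @ W2 + b2

def mse(pred, target):
    return float(np.mean((pred - target) ** 2))

initial_loss = mse(forward(X)[1], y)

lr = 0.05
for step in range(3000):
    h, pred = forward(X)
    # Backpropagation written out by hand (plain gradient descent).
    grad_pred = 2.0 * (pred - y) / len(X)
    grad_W2 = h.T @ grad_pred
    grad_b2 = grad_pred.sum(axis=0)
    grad_z = (grad_pred @ W2.T) * (1.0 - h ** 2)  # tanh'(z) = 1 - tanh(z)^2
    grad_W1 = X.T @ grad_z
    grad_b1 = grad_z.sum(axis=0)
    W1 -= lr * grad_W1
    b1 -= lr * grad_b1
    W2 -= lr * grad_W2
    b2 -= lr * grad_b2

final_loss = mse(forward(X)[1], y)
print(f"loss before: {initial_loss:.4f}, after: {final_loss:.4f}")
```

Every step of the computation is visible in a few dozen lines, which is the transparency argument for skipping a framework on small models.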

🔟 10 Key Features to boost DNNs

- 🔁 Reparameterization — Change the shape of parameters to improve stability or flexibility.

- 👻 Ghost parameters — “Ghost” parameters that smooth the descent and enable watermarking.

- 🧱 Layer flattening — Optimize all layers at once, reducing error propagation.

- 🪜 Sub-layers — Optimize by parameter blocks, useful in high-dimensional settings.

- 🐝 Swarm optimization — Multiple particles exploring the space to avoid local minima.

- 🔥 Decaying entropy — Allow controlled ascents to escape gradient traps.

- 🎚️ Adaptive loss — The loss function changes dynamically to reactivate learning.

- ⚖️ Equalization — Temporarily transform the output to accelerate convergence.

- 📏 Normalized parameters — Everything in [0,1] to prevent gradient explosions.

- 🧮 Math-free gradient — Gradients without formulas: only data and precomputed tables.
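Two of the features above, normalized parameters and the math-free gradient, can be sketched together. The version below is one possible reading, not the post's actual implementation: it estimates the gradient from loss evaluations alone using central finite differences (it does not use precomputed tables), and it keeps parameters in [0, 1] by clipping after each update. The helper `numerical_gradient`, the toy model, and `true_params` are hypothetical names introduced for this example.

```python
import numpy as np

def numerical_gradient(loss_fn, params, eps=1e-4):
    """Central finite-difference gradient: needs only loss values, no derivative formula."""
    grad = np.zeros_like(params)
    for i in range(params.size):
        step = np.zeros_like(params)
        step[i] = eps
        grad[i] = (loss_fn(params + step) - loss_fn(params - step)) / (2.0 * eps)
    return grad

rng = np.random.default_rng(1)
x = rng.uniform(0.0, 1.0, size=100)
true_params = np.array([0.3, 0.7])            # hypothetical target, inside [0, 1]
y = true_params[0] + true_params[1] * x ** 2  # noiseless toy data

def loss_fn(p):
    return float(np.mean((p[0] + p[1] * x ** 2 - y) ** 2))

params = np.array([0.9, 0.1])
lr = 0.5
for _ in range(2000):
    grad = numerical_gradient(loss_fn, params)
    # Clipping keeps every parameter in [0, 1] after each update.
    params = np.clip(params - lr * grad, 0.0, 1.0)

print(params)
```

The trade-off is cost: each gradient estimate needs two loss evaluations per parameter, so this style suits small parameter blocks rather than millions of weights.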

🧠 Explanation in brief

If you’re new to this, imagine a neural network as a machine that learns patterns.

This approach proposes:

- Using simple nonlinear functions instead of traditional layers.

- Adding “tricks” that help the model learn faster and avoid getting stuck.

- Temporarily transforming the output so learning is more efficient.

- Keeping the code minimal and transparent, without heavyweight frameworks.
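The idea of temporarily transforming the output can be sketched as follows. This is a hedged illustration only: the post does not say which transform its equalization uses, so the logarithm here is a stand-in, and the toy data, the two-parameter model, and the names `transform` and `inverse` are assumptions made for this example.

```python
import numpy as np

rng = np.random.default_rng(2)
x = rng.uniform(0.0, 2.0, size=200)
y = np.exp(1.5 * x + 0.5)  # toy response spanning a wide range

# Temporary output transform and its inverse (stand-ins chosen for this sketch).
def transform(values):
    return np.log(values)

def inverse(values):
    return np.exp(values)

# Train on the transformed output, where the relationship is easy to fit.
z = transform(y)
a, b = 0.0, 0.0
lr = 0.1
for _ in range(5000):
    resid = a * x + b - z
    a -= lr * 2.0 * np.mean(resid * x)
    b -= lr * 2.0 * np.mean(resid)

# Map predictions back to the original scale once training is done.
pred = inverse(a * x + b)
print(f"a = {a:.4f}, b = {b:.4f}")
```

The model only ever sees the transformed target during training; the inverse is applied once at the end, which is what makes the transform temporary.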

The result: faster models that are more explainable and easier to tune.

More information at the link 👇

Also published on LinkedIn.